Operation

| Name | Value |
|---|---|
| Repository | https://github.com/anagolay/anagolay-chain/tree/main/pallets/operations |
| Pallet | Yes |


Traditionally, libraries are created and distributed in many different formats, with many different interfaces, by many different people. Without standardization, libraries do not connect to each other, and the burden falls on users to build and maintain new libraries that internally connect other libraries.

Anagolay solves these problems by providing a solution that runs on any WASM-able environment.

Within the Anagolay Network context, an Operation defines a library, its name, inputs, outputs, dependencies, and its type. For all Operations to talk to each other, a few things need to be standardized. First, the input and output parameters of each Operation need a strict definition. We also want to chain (link) many Operations to produce more complex behavior, which is only possible if the chained Operations understand each other's inputs and outputs.

Operation is an abstraction that represents one task in a sequence of tasks, a Workflow.

Operation execution is not necessarily tied to any blockchain, but some Operations can run in a no-std environment and thus on-chain. For example, Anagolay uses a Workflow to generate CIDs on-chain.

An Operation MUST produce the same result on Earth as on Mars or anywhere else in the Universe, given the same data.

An Operation is made of compiled code with WASM bindings so that it can be used by both the JavaScript engine and the compiled Rust code.

Types:

- An Operation may define its own types to use as inputs and output. This is convenient for creating them natively or from a WASM environment.

Functions:

- The execute function: entry point of the Operation. It accepts a collection of inputs to process and the configuration of the execution. Aside from its implementation, the code also deals with deserialization and serialization toward the WASM world.

- The describe function: returns the manifest data of the Operation, which is described in the following section.

The Operation manifest

The describe function produces the manifest data. This data, together with a content identifier (CID), forms the Operation manifest, which is stored on-chain. The manifest allows introspection of the Operation interface since it contains the following information:

- id: wf_cid1_from_bytes(data)
- data
  - name: a human-readable unique identifier, name of the operation
  - description: a brief description of the operation task objective
  - inputs: a collection of data type names defining the expected input parameters
  - config: a map where keys are names of configuration parameters and values are collections of strings representing allowed values
  - groups: tells which groups the Operation belongs to, also controlling the execution flow
  - output: data type name defining the operation output
  - repository: the fully qualified URL for the repository; this can be any public repo URL
  - license: short name of the license, like "Apache-2.0"
  - features: defines what features the operation supports (like disabling std capability)

{
  "id": "bafymbzacidtsdjfehszh2vqudmraf5phevmqbjl5fnlfhpc6eqwopdzninhdov3r64mpnaoyeu6hjulnrmgttha4pddyny3zyjv6utoddozql3p7",
  "data": {
    "name": "op_multihash",
    "desc": "Anagolay operation to generate multihash.",
    "input": ["Bytes"],
    "config": {
      "hasher": ["Sha2_256", "Blake3_256"]
    },
    "groups": ["SYS"],
    "output": "op_multihash::U64MultihashWrapper",
    "repository": "https://gitlab.com/anagolay/operations/op_multihash",
    "license": "Apache 2.0",
    "features": ["std"]
  }
}
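The manifest data shown above can be modelled as a plain Rust structure. The following is a minimal sketch using only the standard library. The names `OperationData`, `op_multihash_data` and `validate_config` are hypothetical and are not taken from the Anagolay code base. The sketch illustrates how the `config` map of allowed values could be used to check a configuration before execution.

```rust
use std::collections::BTreeMap;

/// Hypothetical mirror of the `data` field of the Operation manifest.
#[derive(Debug, Clone)]
pub struct OperationData {
    pub name: String,
    pub desc: String,
    pub input: Vec<String>,
    /// Configuration key mapped to the list of allowed values.
    pub config: BTreeMap<String, Vec<String>>,
    pub groups: Vec<String>,
    pub output: String,
    pub repository: String,
    pub license: String,
    pub features: Vec<String>,
}

/// Builds the manifest data of the `op_multihash` example.
pub fn op_multihash_data() -> OperationData {
    let mut config = BTreeMap::new();
    config.insert(
        "hasher".to_string(),
        vec!["Sha2_256".to_string(), "Blake3_256".to_string()],
    );
    OperationData {
        name: "op_multihash".to_string(),
        desc: "Anagolay operation to generate multihash.".to_string(),
        input: vec!["Bytes".to_string()],
        config,
        groups: vec!["SYS".to_string()],
        output: "op_multihash::U64MultihashWrapper".to_string(),
        repository: "https://gitlab.com/anagolay/operations/op_multihash".to_string(),
        license: "Apache 2.0".to_string(),
        features: vec!["std".to_string()],
    }
}

/// Checks that every configured key is declared in the manifest data
/// and that its value is one of the allowed values (assumed semantics).
pub fn validate_config(
    data: &OperationData,
    config: &BTreeMap<String, String>,
) -> Result<(), String> {
    for (key, value) in config {
        let allowed = data
            .config
            .get(key)
            .ok_or_else(|| format!("unknown configuration key: {}", key))?;
        if !allowed.contains(value) {
            return Err(format!("value {} is not allowed for {}", value, key));
        }
    }
    Ok(())
}
```

With this sketch, a configuration of `hasher = "Sha2_256"` passes validation, while an undeclared key or a value outside the allowed list is rejected with an error message.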

Manifest generation

In order for the Operation to produce its own manifest from the code, the following steps are necessary:

Annotate the execute function

// This allows us to fully qualify the output type
use crate as op_multihash;
use an_operation_support::describe;

#[describe([
  groups = [
    "SYS",
  ],
  config = [
    hasher = ["sha2_256", "blake3_256"],
  ],
  features = [
    "config_hasher",
    "std"
  ]
])]
pub async fn execute(
  bytes: &Bytes,
  config: BTreeMap<String, String>,
) -> Result<op_multihash::U64MultihashWrapper, String> {
  // ...
}

The attribute describe provides additional information not available in the Cargo.toml or in the execute function signature, like the groups the Operation belongs to and its configuration.

Note that the function asynchronously returns a Result, which is ultimately bound to a JavaScript Promise in the WASM implementation.

Support for no-std is assumed to be unavailable by default. If the Operation does support it, the std feature must be declared here, which makes it possible to turn the std capability on and off by enabling or disabling that feature.

Another useful application of features, combined with configuration, is conditional compilation when the Operation is built in a Workflow. Defining a feature made of the config prefix followed by a configuration key (hasher in the example, giving config_hasher) makes it possible to enable the Cargo feature that corresponds to the selected value: config_hasher_sha2_256 or config_hasher_blake3_256, according to the configuration passed to the Operation.
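The naming scheme described above can be sketched as a small function. This is only an illustration under stated assumptions: `resolve_features` is a hypothetical helper, not part of `an_operation_support`, and lowercasing the selected value is an assumption made so that a manifest value such as `Sha2_256` maps to the feature name quoted in the text.

```rust
use std::collections::BTreeMap;

/// Derives Cargo feature names from the declared features and the selected
/// configuration. Features starting with `config_` are expanded with the
/// selected value; all other features (such as `std`) pass through unchanged.
pub fn resolve_features(
    declared: &[&str],
    config: &BTreeMap<String, String>,
) -> Vec<String> {
    let mut resolved = Vec::new();
    for &feature in declared {
        match feature.strip_prefix("config_") {
            // `key` is the configuration key, e.g. `hasher`
            Some(key) => {
                if let Some(value) = config.get(key) {
                    // Assumption: feature names use the lowercase value
                    resolved.push(format!("config_{}_{}", key, value.to_lowercase()));
                }
            }
            None => resolved.push(feature.to_string()),
        }
    }
    resolved
}
```

Given the declared features `config_hasher` and `std` and a configuration selecting `sha2_256` for `hasher`, the sketch yields `config_hasher_sha2_256` and `std`. In the Operation code, such a feature name would typically guard an implementation through `#[cfg(feature = "config_hasher_sha2_256")]`.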

Make the execute and describe functions available to WASM

use an_operation_support::{from_map, from_value, to_value};

#[wasm_bindgen(js_name=execute)]
pub async fn wasm_execute(operation_inputs: Vec<JsValue>, config: Map) -> Result<JsValue, JsValue> {
  let input: U64MultihashWrapper = from_value(operation_inputs.get(0).unwrap())?;
  let config = from_map(&config.into())?;
  let output = execute(&input, config).await?;
  to_value(&output)
}

/// Output manifest
#[wasm_bindgen(js_name=describe)]
pub fn wasm_describe() -> String {
  crate::describe()
}

Make the manifest available as build target

A common implementation of the main method is provided by an_operation_support::main. It’s sufficient to pass the arguments, including the describe function, so that the program argument -m or --manifest produces the manifest data as output.

use op_file::describe;

fn main() {
  let main_args = an_operation_support::MainArgs {
    app_name: env!("CARGO_PKG_NAME"),
    app_version: env!("CARGO_PKG_VERSION"),
    describe: &|| crate::describe()
  };
  an_operation_support::main(&main_args);
}
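To make the role of the -m and --manifest arguments more concrete, here is a minimal sketch of how such a flag could be handled with the standard library alone. It is not the implementation of an_operation_support::main, whose internals are not shown in this document; the function `manifest_if_requested` is hypothetical.

```rust
/// Returns the manifest data when `-m` or `--manifest` is among the program
/// arguments, and `None` otherwise. `describe` stands in for the describe
/// function of the Operation.
pub fn manifest_if_requested(
    args: &[String],
    describe: &dyn Fn() -> String,
) -> Option<String> {
    let requested = args
        .iter()
        .skip(1) // the first argument is the program name
        .any(|arg| arg == "-m" || arg == "--manifest");
    if requested {
        Some(describe())
    } else {
        None
    }
}
```

A real binary would collect `std::env::args()` into a vector, pass it to this function, and print the returned manifest data to standard output.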

Versions

When we create and publish the Operation, we are creating the on-chain Manifest and initial Version.

An Operation may depend on the types of another Operation, declared as a dependency in Cargo.toml. In this case, the dependency should use git resolution, pointing to the repository given in the manifest of the dependency. When the Workflow is built, the dependency is then patched to the appropriate Version.

For example, op_cid declares a dependency on op_multihash in the following way:

op-multihash = { version = "0.1.0", default-features = false, features = [
  "anagolay",
], git = 'https://gitlab.com/anagolay/operations/op-multihash', optional = true }

This dependency will be patched to the correct Operation Version of op_multihash in the Workflow build.

Interfaces

We distinguish between the following interfaces for an Operation implementation:

- WASM boundary

- Remote APIs

The advantage of formally defining such interfaces is that every Operation must comply with the same definitions. In turn, this makes it possible to create a complex flow of Operations (a Workflow) and execute them automatically. It also allows fine control of execution, for example, the possibility to either compile a whole Workflow in Rust or dynamically execute each Operation from a JavaScript engine through its WASM interface.

WASM boundary

The JavaScript engine can access only the methods and types that are exported in the bindings, which are:

Types:

- input and output types: for op_multihash, for instance, this would be a serializable wrapper around MultihashGeneric<multihash::U64> since the latter is not serializable. Conversely, if the required input or the execution result is already serializable, no custom type is needed.

Functions:

The following functions are the WASM bindings of the respective Rust implementations and deal mainly with serialization and deserialization.

- describe

- execute

Dependencies on other Operations

A custom Operation implementation can rely on already existing types and methods and on the previous execution of other Operations to produce the required inputs. Thus, there are two distinct approaches to dependency management:

- Compile-time Operation dependencies: managed by Cargo, included without default features but with the feature anagolay, which reduces the amount of code incorporated in the build to the bare minimum. This kind of dependency is needed when you want to reuse existing code from another Operation.

- Run-time Operation dependencies: identified during Workflow execution by looking at the Operation definition. This kind of dependency resolution happens at the Workflow level, meaning that, to produce a consistent result, linked Operations are executed according to their dependency chain, and the output of the first execution is propagated by the Workflow to be the input of the next.
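The propagation of outputs along a run-time dependency chain can be sketched as follows. This is a simplified, synchronous illustration, not the Anagolay Workflow engine: the `Step` type, `run_chain`, and the two example steps are hypothetical, and real Operations exchange typed, serialized values rather than raw byte vectors.

```rust
/// Hypothetical synchronous stand-in for the `execute` function of an Operation.
pub type Step = fn(Vec<u8>) -> Result<Vec<u8>, String>;

/// Executes the steps in order, feeding the output of each step to the next.
/// The first error stops the chain and is returned to the caller.
pub fn run_chain(input: Vec<u8>, steps: &[Step]) -> Result<Vec<u8>, String> {
    steps.iter().try_fold(input, |data, step| step(data))
}

/// Example step: rejects empty input, otherwise passes the data through.
pub fn non_empty(data: Vec<u8>) -> Result<Vec<u8>, String> {
    if data.is_empty() {
        Err("input must not be empty".to_string())
    } else {
        Ok(data)
    }
}

/// Example step: reverses the order of the bytes.
pub fn reverse(mut data: Vec<u8>) -> Result<Vec<u8>, String> {
    data.reverse();
    Ok(data)
}
```

Running the chain `[non_empty, reverse]` on the bytes 1, 2, 3 returns 3, 2, 1, while an empty input fails at the first step and the second step is never executed.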

Remote APIs

The execution of an Operation may require remote services, although this is not always desirable: it introduces a point of failure in the reproducibility of the Workflow because of side effects on remote systems. On the other hand, it provides greater flexibility.

This is still compliant with the philosophy that, given the same or similar input, the Workflow will always produce the same result even if this input comes from a remote API invocation.

Examples of remote invocations are:

- External APIs or services

- Loading a file from a URL (since standard file support is not available in WASM)

To support Remote API invocation, all Operation execution happens asynchronously. This means that the caller is not blocked while execution is in progress.

Types and Functions

Types and functions provided by the an-operation-support crate are used by all Operations. They provide a common ground to implement the usual functionalities.

- Primitive types like Bytes and GenericId and the data model of Workflows and Operations

- Input and Output (de)serialization functions

- Describe macro

- Main method for command line implementation (manifest data generation, etc.)

Standard Operations all expose the same behavior, which means:

- Input and Output data types
  - must encode and decode themselves to and from JsValue

- The describe function
  - must provide the Operation manifest data

- The execute function
  - must accept a collection of inputs and a map of configuration parameters and return one result

FlowControl Operations are special in nature since they have a number of inputs which is known only at the time the Workflow manifest is generated. Consequently, the output is unknown in the Operation manifest data, too.

They process a logical function, outputting all or some inputs in a different form. Some example Operations from this class include:

- collect: aggregates all input into a collection, returned as output

- identity: outputs the exact input. This is useful when inside a Workflow the same external input is necessary for several Operations without the need to request it several times

- match: matches all, any, or none of the inputs and produces an error if the condition is not met.
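The behavior of these three FlowControl Operations can be sketched with plain Rust functions. This is an illustration under stated assumptions, not the Anagolay implementation: the names `collect`, `identity`, `match_inputs` and `MatchMode` are hypothetical, and since this document does not specify what the inputs are matched against, the sketch assumes a caller-supplied predicate.

```rust
/// collect: aggregates all inputs into one collection, returned as output.
pub fn collect<T: Clone>(inputs: &[T]) -> Vec<T> {
    inputs.to_vec()
}

/// identity: outputs the exact input.
pub fn identity<T>(input: T) -> T {
    input
}

/// Which inputs must satisfy the condition (assumed interpretation).
pub enum MatchMode {
    AllOf,
    AnyOf,
    NoneOf,
}

/// match: checks the inputs against a predicate according to the mode and
/// produces an error if the condition is not met. The predicate is an
/// assumption made for this sketch.
pub fn match_inputs<T>(
    inputs: &[T],
    mode: MatchMode,
    predicate: impl Fn(&T) -> bool,
) -> Result<(), String> {
    let met = match mode {
        MatchMode::AllOf => inputs.iter().all(|input| predicate(input)),
        MatchMode::AnyOf => inputs.iter().any(|input| predicate(input)),
        MatchMode::NoneOf => !inputs.iter().any(|input| predicate(input)),
    };
    if met {
        Ok(())
    } else {
        Err("match condition not met".to_string())
    }
}
```

Because the number of inputs is only a slice length here, the sketch also reflects why the input count of a FlowControl Operation is not fixed in its own manifest data.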

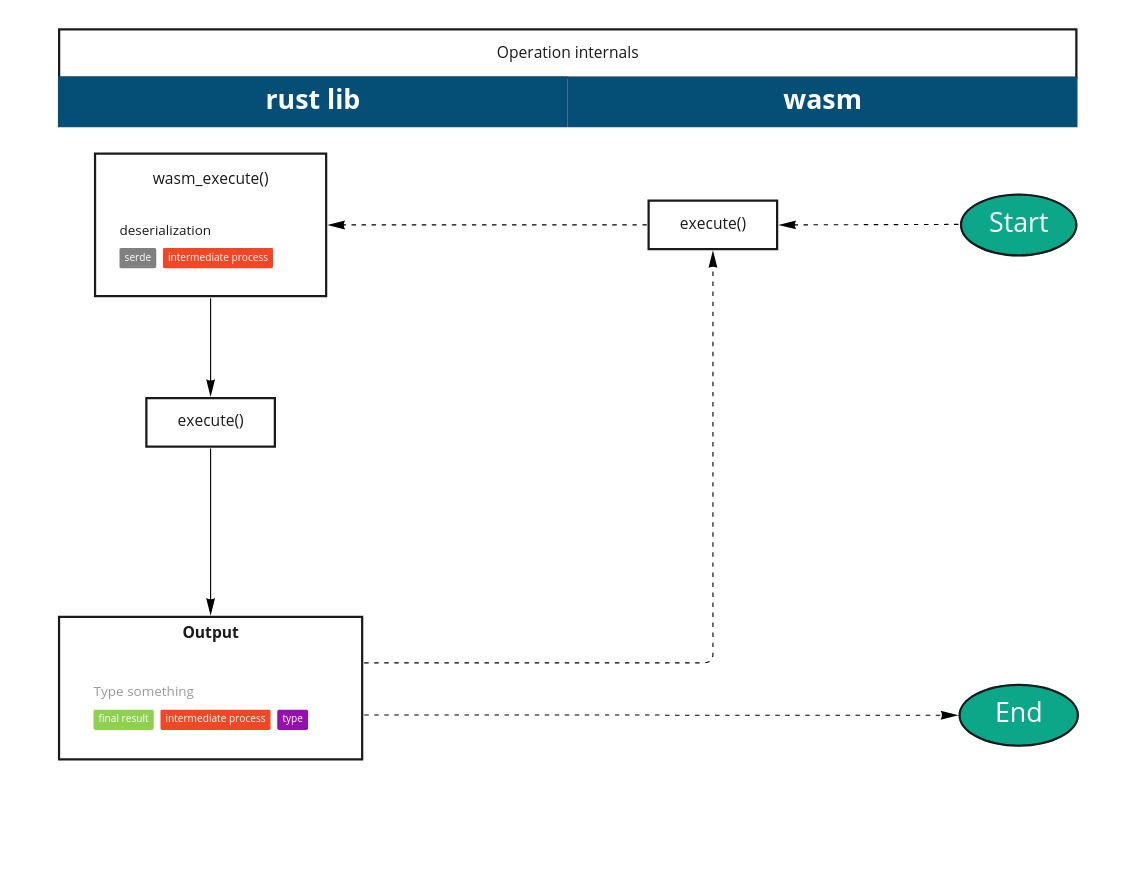

Operation code structure and data flow

Each Operation execution produces an output that can be used as input to execute a subsequent Operation. In order to be able to pass the WASM boundary, the output is serialized to be passed as input of the next execute() function, where it gets deserialized.

Inside the execute function, the expected input is deserialized first.

When the execution flow is over, the final result is returned by the execute function. Assuming that both the array of inputs and the configuration are defined in context and that the execute function of the Operation is imported, the call in a JavaScript environment looks like this:

const output = await execute(inputs, config);