This article is part of the Web3 Grant Program PR 719 deliverable.

Overview

Anagolay is a peer-to-peer network that stores records of Rights (Copyright, Licenses, and Ownership), Restrictions, and Proofs of any digital content. It empowers users to store, claim, sell, and rent their work with the correct transfer of Rights and usage of Licenses. Actual digital data is never stored on the chain, only its cryptographic or perceptual proof. As such, the Proof acts as an identifier, verifiable by users who have access to the same data without disclosing it in the process.

Why are Rights important to the distributed web?

The most obvious example where this approach is a game-changer is NFT marketplaces, especially when it comes to the definition of uniqueness. An NFT is considered unique, but it is not: the identifier is unique, not the content. It's possible to mint the same image as an NFT on different marketplaces (see Kelp Digital, “Are NFTs as Unique as We Think?”), and on some marketplaces you can mint slightly modified versions of the same image multiple times. This alone shows that there is currently no way to ensure uniqueness. What we are building is a way to determine the uniqueness of digital content. With Anagolay implemented, NFTs will become obsolete and either die out or evolve.

How does our solution improve on the current state of the art? We rely on the identifiers of the content (the Proofs, as a plurality of indicators) rather than on incrementing a single value obtained through the minting process. Third parties (including current NFT marketplaces) will instead be able to query the Anagolay Network to see if these Proofs match any records with claimed Copyrights or Ownerships.

The use cases go way beyond digital images and photography. Anagolay’s proof-based IP verification technology could be similarly applied in the music industry and video production, disrupting these markets. Imagine being able to license a musical score as part of a composition, or a cut of a video (even only a few frames), and always being able to trace this work back to its legitimate author and pay them royalties.

Anagolay’s approach to Rights management

Anagolay associates several identifiers of authenticity (we call them Proofs) with a piece of content and allows verifying the correctness of a claim against such identifiers. Computing the identifiers is a repeatable process that always returns the same output, no matter where or when the computation executes, as long as the user provides the same input data. The execution consists of several tasks, called Operations. When connected together, they make up a Workflow.
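The repeatability described above can be pictured with a minimal Rust sketch. It assumes, purely for illustration, that an Operation is a pure function from bytes to bytes; the two Operations and the run_workflow helper are hypothetical and are not part of the Anagolay code base.

```rust
/// Stand-in for an Operation: a pure function from input bytes to output bytes.
/// (Real Operations have typed inputs, a configuration and a manifest.)
type Operation = fn(&[u8]) -> Vec<u8>;

/// Hypothetical Operation: reverses the input bytes.
fn op_reverse(input: &[u8]) -> Vec<u8> {
    input.iter().rev().copied().collect()
}

/// Hypothetical Operation: folds the input into a one-byte XOR checksum.
fn op_xor_fold(input: &[u8]) -> Vec<u8> {
    vec![input.iter().fold(0u8, |acc, byte| acc ^ byte)]
}

/// A Workflow runs its Operations in order, feeding each output to the next.
/// Because every step is pure, the same input always yields the same output.
fn run_workflow(operations: &[Operation], input: &[u8]) -> Vec<u8> {
    operations
        .iter()
        .fold(input.to_vec(), |data, operation| operation(&data))
}
```

Since no step depends on time, location, or hidden state, two parties holding the same data can recompute and compare the result independently.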

Operations and Workflows

Operation environment

Operations must be versatile and easy to integrate; this is why we compile them to WASM, which can be used in almost any environment. The Operations are written in Rust so that they can also be included and executed natively in Rust projects, with or without nostd capability. This wide spectrum of execution environments has great potential and multiple applications. To make it possible, all code must comply with a common specification that facilitates integration and deployment:

- The Operation has an immutable manifest, which defines the interface used to interact with its inner implementation (inputs, output, configuration...). For the time being, newer versions of the same Operation must always comply with the initial manifest.

- The manifest also allows declaring nostd capability; executing without the Rust standard library is desirable in several contexts, like in embedded systems, or in a blockchain that can be upgraded without a hard fork (more on the blockchain technology we use below).

- There are WASM bindings to invoke the Operation from a JavaScript engine. All execution is asynchronous so that the caller is not blocked while the Operation produces its output.

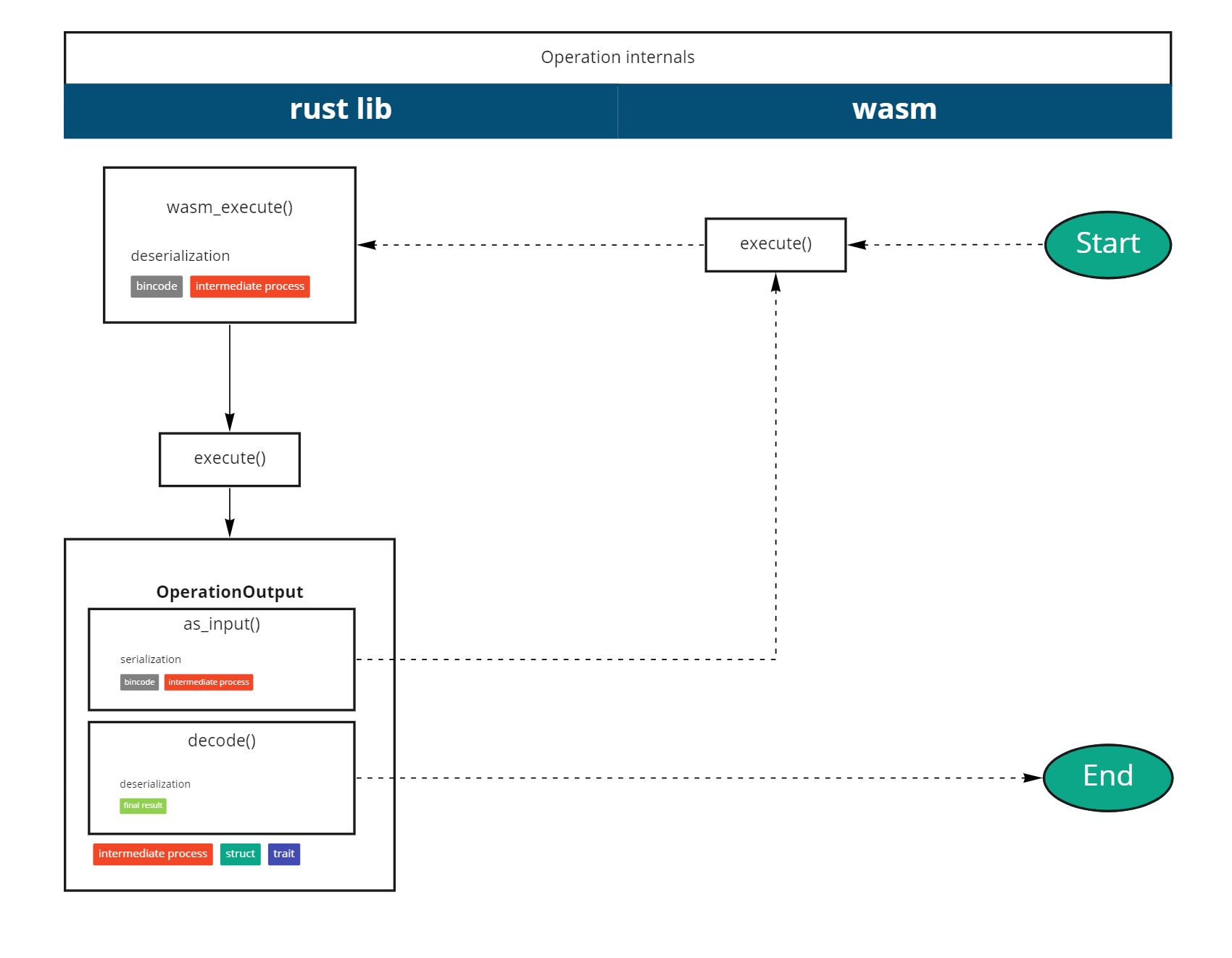

- The output of an Operation may be used as an input of a subsequent Operation. To cross the WASM boundary, the previous output must be serialized to perform the subsequent invocation and then deserialized inside the subsequent Operation implementation. To keep the performance loss as small as possible, we use a near-to-memory (de)serializer called bincode. This allows Operations to be sandboxed and to share no memory references with each other.

Specification

Writing a custom Operation should be simple since they all follow the same pattern. In Milestone 2 we will implement the Anagolay CLI command for Operation scaffolding.

Operation manifest

The describe function produces the manifest data; this data, together with its content identifier, forms the Operation manifest, which is stored on-chain. The manifest allows introspection of the Operation interface because it contains the following information:

- id: cid(data)

- data

  - name: a people-readable unique identifier, name of the Operation

  - description: a brief description of the Operation task objective

  - inputs: a collection of data type names defining the expected input parameters

  - config: a map where keys are names of configuration parameters and values are collections of strings representing allowed values

  - groups: a switch used to generate the Workflow segments

  - output: data type name defining the Operation output

  - repository: the fully qualified URL for the repository, this can be any public repo URL

  - license: short name of the license, like "Apache-2.0"

  - nostd: defines if the Operation execution in a nostd environment is supported

As shown above, we use content identifiers to generate the id as a cryptographic hash of the data. Thanks to this approach we can confidently verify the immutability of such data at any moment.
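As a rough illustration of the id = cid(data) relationship, the sketch below mirrors the manifest fields in Rust. The field types, the example values, and the helper names are assumptions made for this example. The standard library's DefaultHasher is only a stand-in: it is neither a real CID nor cryptographic, and its output is not guaranteed to stay the same across Rust releases.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::BTreeMap;
use std::hash::{Hash, Hasher};

/// Manifest data; the field types are assumptions made for this sketch.
#[derive(Debug, Clone, Hash)]
struct OperationData {
    name: String,
    description: String,
    inputs: Vec<String>,
    config: BTreeMap<String, Vec<String>>,
    groups: Vec<String>,
    output: String,
    repository: String,
    license: String,
    nostd: bool,
}

#[derive(Debug, Clone)]
struct OperationManifest {
    id: String,
    data: OperationData,
}

/// Stand-in for cid(data): NOT a real CID and NOT a cryptographic hash.
fn stand_in_cid(data: &OperationData) -> String {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    format!("{:016x}", hasher.finish())
}

/// The id is derived from the data, never chosen freely.
fn build_manifest(data: OperationData) -> OperationManifest {
    OperationManifest { id: stand_in_cid(&data), data }
}

/// Anyone can recompute the id and detect a modified manifest.
fn is_untampered(manifest: &OperationManifest) -> bool {
    manifest.id == stand_in_cid(&manifest.data)
}

/// Illustrative values only; this is not the real op_file manifest.
fn example_data() -> OperationData {
    OperationData {
        name: "op_file".to_string(),
        description: "Fetches a file from a URL and returns its bytes".to_string(),
        inputs: vec!["String".to_string()],
        config: BTreeMap::new(),
        groups: vec![],
        output: "Bytes".to_string(),
        repository: "https://gitlab.com/anagolay/op-file".to_string(),
        license: "Apache-2.0".to_string(),
        nostd: false,
    }
}
```

Changing any field of the data, even a single character of the description, yields a different id, which is what makes tampering detectable.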

WASM bindings

The following bindings can be invoked not only natively but also from a JavaScript engine:

- describe(): generates the data of the manifest shown above directly from the source code, in order to avoid discrepancies between the displayed manifest and the internal implementation

- execute(): accepts the serialized inputs and the configuration, and returns a JavaScript Promise. Internally, it invokes the Operation business logic
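To make the shape of this interface more concrete, here is a simplified Rust sketch. It is not the actual binding code: the trait, the OpConcat Operation, and the separator configuration key are hypothetical, describe returns a plain string instead of structured manifest data, and execute is synchronous here, whereas the real binding is asynchronous.

```rust
use std::collections::BTreeMap;

/// Simplified, synchronous stand-in for the Operation interface.
trait Operation {
    /// Describes the interface; here just a human-readable summary.
    fn describe(&self) -> String;

    /// Runs the business logic on serialized inputs and a configuration.
    fn execute(
        &self,
        inputs: &[Vec<u8>],
        config: &BTreeMap<String, String>,
    ) -> Result<Vec<u8>, String>;
}

/// Hypothetical Operation that joins two byte buffers.
struct OpConcat;

impl Operation for OpConcat {
    fn describe(&self) -> String {
        "op_concat: inputs=[Bytes, Bytes], config={separator}, output=Bytes".to_string()
    }

    fn execute(
        &self,
        inputs: &[Vec<u8>],
        config: &BTreeMap<String, String>,
    ) -> Result<Vec<u8>, String> {
        if inputs.len() != 2 {
            return Err(format!("expected 2 inputs, got {}", inputs.len()));
        }
        // A missing configuration entry falls back to an empty separator.
        let separator = config.get("separator").map(String::as_str).unwrap_or("");
        let mut output = inputs[0].clone();
        output.extend_from_slice(separator.as_bytes());
        output.extend_from_slice(&inputs[1]);
        Ok(output)
    }
}
```

Because every Operation exposes the same two entry points, a caller can inspect the interface first and only then decide how to feed inputs into execute.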

Execution

The business logic of the op_file Operation is to fetch a file from a remote URL and return its content as a byte buffer. This simple task must succeed in a variety of situations, but unfortunately it cannot work in a nostd environment because no network interface is available in that context. It does work, though, in the browser, in Node.js when a Fetch API polyfill is available, and natively in Rust. The following generic diagram shows how the execution works for op_file and for all the other Operations to come:

In the next Milestone, taking advantage of this consistent interface, we will be able to automatically chain Operation execution according to the Workflow definition.

Anagolay blockchain

Like many modern distributed systems, Anagolay uses a blockchain to keep the state of the events happening and to register entities created by peers. We’ve built our chain on top of Substrate, which guarantees enough flexibility to develop our own solution and provides a robust, modular approach to blockchain development. We’ve implemented the following modules, called pallets in Substrate terminology:

- anagolay-support: provides common functionalities like types definition and content identifiers computation.

- an-operations: provides the extrinsic API to publish an Operation in its initial version. While at the moment no other feature is implemented, this pallet will cover the scope of managing and maintaining Operations.

Versioning

Operation code, like all source code, evolves over time to fix bugs and update dependencies. For this reason, it’s not the Operation itself that becomes part of a new Workflow, but one of its versions (typically the latest). In the future, versions will be subject to community review and approval, to make sure that a flawed one does not go mainstream. The reviewers will be rewarded with Anagolay tokens. On the other hand, creating an Operation will cost tokens, since it is a resource-intensive process.

These are the required steps:

- rehost the source code, the Operation artifacts, and the documentation on IPFS

- produce the manifests of the Operation and of its Version

- invoke the blockchain extrinsic with the manifests to store the new Operation

The Operation Version manifest looks like this:

- id: cid(data)

- data

  - operationId: Operation ID where the manifest is located

  - parentId: present only in case of a non-initial (improved) implementation

  - documentationId: using our rehosting service, rehosts the generated documentation

  - rehostedRepoId: using our rehosting service, rehosts a specific REVISION

  - packages: a collection of packages as defined in the [PackageType](https://github.com/anagolay/anagolay-chain/blob/main/pallets/operations/src/types.rs#L104)

    - {}: ready to include the dependency in Cargo.toml

      - packageType: possible options are CRATE, WASM, ESM, WEB, CJS

      - fileUrl: this should be a compressed tar file ready for extraction (without the .git subfolder), available from IPFS or as a URN (potentially https://ipfs.anagolay.network/ipfs/bafy...)

      - ipfsCid: bafy...

    - ...

- extra: a key-value pair for adding extra fields that are not part of the data. In our case it holds createdAt: a timestamp inside the data would make it impossible to recalculate the same CID at verification time, since time never stops and always moves forward.

Here is the real-world example for the op-file stored Version:

    {
      id: bafkr4igwyuzewkfeno3jhxiscazah2dx62fswowhira5omzgrugdskregm
      data: {
        operationId: bafkr4ifsdakwrkew2aftprisolprbeipardj4mkoskder7vwtlqsuqhfqu
        parentId:
        documentationId: bafybeiahkoghy4yozvqrp5q66mitk6kpxdec243mdzcel67jdt3gcyffi4
        rehostedRepoId: bafybeicjzmc4wim46lbkgczoiebcnwxpj4o34zlfs4lif4vkkduxbkigdy
        packages: [
          {
            packageType: ESM
            fileUrl: bafybeidjm5wmgsk57w2n3cdduvebkyrtvq72kzka77gsmdcgm4pj7j33cq
            ipfsCid: bafybeidjm5wmgsk57w2n3cdduvebkyrtvq72kzka77gsmdcgm4pj7j33cq
          }
          {
            packageType: WEB
            fileUrl: bafybeiewbvlvqsttbewlobg7hjr2byy5qiuhplgm5lgdzz3n3sllz4tvmm
            ipfsCid: bafybeiewbvlvqsttbewlobg7hjr2byy5qiuhplgm5lgdzz3n3sllz4tvmm
          }
          {
            packageType: CJS
            fileUrl: bafybeichmqblpa2hrzvobnijnhtptygj432e2mitxhxyj7rsu6kpkslqx4
            ipfsCid: bafybeichmqblpa2hrzvobnijnhtptygj432e2mitxhxyj7rsu6kpkslqx4
          }
          {
            packageType: WASM
            fileUrl: bafybeifr7d7i2cowwth2udwhk3rbchbjp2agrqeeemxw4zcap77d6tehli
            ipfsCid: bafybeifr7d7i2cowwth2udwhk3rbchbjp2agrqeeemxw4zcap77d6tehli
          }
        ]
      }
      extra: {
        createdAt: 1,646,325,294
      }
    }

Once again we use the content identifier of the data as the id of the version. Since the manifest data contains the operationId, this makes sure that the association between an Operation and a version is immutable and uniquely identifiable.
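The binding between an Operation and its Versions can be sketched in Rust as follows. The type names, field types, and placeholder values are assumptions for illustration, and DefaultHasher again stands in for a real content identifier (it is not cryptographic and not a CID). Note that createdAt lives outside the hashed data, as described for the extra field.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
enum PackageType { Crate, Wasm, Esm, Web, Cjs }

#[derive(Debug, Clone, Hash)]
struct Package {
    package_type: PackageType,
    file_url: String,
    ipfs_cid: String,
}

/// Everything in here contributes to the Version id.
#[derive(Debug, Clone, Hash)]
struct VersionData {
    operation_id: String,
    parent_id: Option<String>,
    documentation_id: String,
    rehosted_repo_id: String,
    packages: Vec<Package>,
}

#[derive(Debug, Clone)]
struct OperationVersion {
    id: String,
    data: VersionData,
    /// Kept in `extra`, outside the hashed data.
    created_at: u64,
}

/// Stand-in for cid(data): NOT a real CID and NOT a cryptographic hash.
fn stand_in_cid(data: &VersionData) -> String {
    let mut hasher = DefaultHasher::new();
    data.hash(&mut hasher);
    format!("{:016x}", hasher.finish())
}

fn new_version(data: VersionData, created_at: u64) -> OperationVersion {
    OperationVersion { id: stand_in_cid(&data), data, created_at }
}

/// Placeholder values; real manifests carry IPFS CIDs here.
fn example_data(operation_id: &str, parent_id: Option<String>) -> VersionData {
    VersionData {
        operation_id: operation_id.to_string(),
        parent_id,
        documentation_id: "<documentation CID>".to_string(),
        rehosted_repo_id: "<rehosted repository CID>".to_string(),
        packages: vec![Package {
            package_type: PackageType::Wasm,
            file_url: "<WASM archive URL>".to_string(),
            ipfs_cid: "<WASM archive CID>".to_string(),
        }],
    }
}
```

In this sketch the same data published at two different times gets the same id, while pointing the data at another Operation or at a parent Version produces a different one.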

IPFS rehosting for Content Versioning

The IPFS website defines IPFS as “a peer-to-peer hypermedia protocol designed to preserve and grow humanity's knowledge by making the web upgradeable, resilient, and more open”. Practically speaking, it’s a file system where resources are identified not by their path but by their content. This approach, where versions are tightly coupled with their published content and, by the nature of the storage, highly distributed, is called Content Versioning, or CVer. It assures us that we will always get the same code behind a given version (CID). This is not true of other approaches, especially in the NPM space, where you can host your own repository, modify the code under the same semantic version, and present it to all users as a trustworthy registry. In practice, CVer is a directory on the IPFS network, configured with IPNS to always point to the directory where the module is stored. The structure is very simple: a list of directories with the rehosted bare git repositories and the corresponding zip, tar, or other archives, which are extracted after download. The names of the archives are fully-fledged CIDs, unique across time and space.

CVer is not meant to replace other versioning methods; rather, it’s a different approach for cases where assurance about the content is important. Maybe in the future there will be a hybrid approach.
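The difference between name-based and content-based versioning can be shown with a small Rust sketch. Both stores are hypothetical in-memory models, not IPFS or NPM clients, and DefaultHasher is only a non-cryptographic stand-in for a CID.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Stand-in for a CID: NOT cryptographic, for illustration only.
fn stand_in_cid(content: &[u8]) -> String {
    let mut hasher = DefaultHasher::new();
    content.hash(&mut hasher);
    format!("{:016x}", hasher.finish())
}

/// Registry keyed by "name@version": the publisher picks the key,
/// so the content behind it can silently change.
#[derive(Default)]
struct NamedRegistry {
    packages: HashMap<String, Vec<u8>>,
}

impl NamedRegistry {
    fn publish(&mut self, name_at_version: &str, content: &[u8]) {
        self.packages.insert(name_at_version.to_string(), content.to_vec());
    }

    fn fetch(&self, name_at_version: &str) -> Option<&[u8]> {
        self.packages.get(name_at_version).map(Vec::as_slice)
    }
}

/// Store keyed by the content itself: the key is derived, not chosen.
#[derive(Default)]
struct ContentStore {
    blobs: HashMap<String, Vec<u8>>,
}

impl ContentStore {
    fn put(&mut self, content: &[u8]) -> String {
        let id = stand_in_cid(content);
        self.blobs.insert(id.clone(), content.to_vec());
        id
    }

    /// Returns the content only if it still matches its identifier.
    fn get(&self, id: &str) -> Option<&[u8]> {
        self.blobs
            .get(id)
            .map(Vec::as_slice)
            .filter(|content| stand_in_cid(content) == id)
    }
}
```

In the named registry a second publish under the same version replaces the code without any visible change to the key; in the content store different code necessarily lives under a different identifier.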

Speaking of assurance about the content, which for source code implies assurance about the behavior, there is another issue: dependencies.

Today, we use centralized registries like npmjs.com and crates.io, which are a single point of failure. When we use a library, we trust its developers to publish the packages with correct versions and in a correct way. We also trust that they trust their dependencies, recursively. On top of that, we must trust the package manager, like npm, yarn, pnpm, or cargo, to properly resolve the version. But what happens when a deep dependency is buggy, or gets sold and a bad actor ships malicious code in a patch version? You know the answer, and it's scary. You can mitigate this by shipping the package-lock file and resolving dependencies from that lock file, but that is inefficient and still not fault-proof.

We realized that current dependency linking is not going to cut it for our approach, in which we want full certainty that we are executing exactly the same code, regardless of whether we create or verify the results. The solution comes from adopting Content Versioning together with immutable package versions and git repositories. This method is called rehosting.

Additionally, we want the proofs to be created and verified anywhere and at any time. An example would be that an astronaut creates a Proof on Mars and we want to verify it on Earth. This is not possible with the currently widespread approach using the centralized registries unless the registry is duplicated and synchronized performantly in every place where Proofs need to be created or verified.

Publisher Service

This microservice’s duty is to rehost the source code, the Operation artifacts, and the documentation on IPFS, allowing the caller to produce an Operation Version manifest. It exposes a REST API to schedule a publish job and to check its status. It’s intended to be polled periodically by the Anagolay Command Line Interface (CLI) whenever an end-user decides to publish an Operation. As said before, since the process is resource-intensive, access will in the future be granted upon payment of a fee.
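The schedule-then-poll interaction can be sketched in Rust as below. The real REST endpoints and payloads are not described here, so everything in this example is hypothetical: the PublisherClient trait, the JobStatus states, and the FakePublisher used to script responses in place of real HTTP calls.

```rust
use std::collections::VecDeque;

/// Hypothetical job states; the real API may expose different ones.
#[derive(Debug, Clone, PartialEq)]
enum JobStatus {
    Queued,
    Running,
    Done { cids: Vec<String> },
    Failed { reason: String },
}

/// Hypothetical client interface standing in for the REST calls.
trait PublisherClient {
    fn schedule(&mut self, repository_url: &str) -> u64;
    fn status(&mut self, job_id: u64) -> JobStatus;
}

/// Polls until the job finishes, fails, or the poll budget runs out.
fn wait_for_job(
    client: &mut dyn PublisherClient,
    job_id: u64,
    max_polls: usize,
) -> Result<Vec<String>, String> {
    for _ in 0..max_polls {
        match client.status(job_id) {
            JobStatus::Done { cids } => return Ok(cids),
            JobStatus::Failed { reason } => return Err(reason),
            JobStatus::Queued | JobStatus::Running => {
                // A real client would sleep here before polling again.
            }
        }
    }
    Err(format!("job {} still pending after {} polls", job_id, max_polls))
}

/// In-memory fake that replays a scripted sequence of statuses.
struct FakePublisher {
    scripted: VecDeque<JobStatus>,
}

impl PublisherClient for FakePublisher {
    fn schedule(&mut self, _repository_url: &str) -> u64 {
        1
    }

    fn status(&mut self, _job_id: u64) -> JobStatus {
        self.scripted.pop_front().unwrap_or(JobStatus::Running)
    }
}
```

Bounding the number of polls keeps a caller from waiting forever on a job that never completes.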

These are the steps of the publishing of an Operation:

- clone the git repository indicated in the request

- verify that the code is formatted according to our coding standards

- rehost the cloned git repository on IPFS

- generate the Operation manifest

- build the Operation code and produce the Crate, WASM, and ESM artifacts

- upload the artifacts on IPFS

- generate the docs and upload them to IPFS

- clean up the working directory and return the CID of every rehosted content
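The steps above can be modeled as an ordered pipeline. The Rust sketch below is an illustration only: the Step names and the run_publish helper are hypothetical, the actual work of each step is supplied by the caller as a closure, and stopping at the first failing step is an assumption of this example rather than documented behavior of the service.

```rust
/// The publishing steps, in the order listed above.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Step {
    CloneRepository,
    CheckFormatting,
    RehostRepository,
    GenerateManifest,
    BuildArtifacts,
    UploadArtifacts,
    UploadDocs,
    Cleanup,
}

const STEPS: [Step; 8] = [
    Step::CloneRepository,
    Step::CheckFormatting,
    Step::RehostRepository,
    Step::GenerateManifest,
    Step::BuildArtifacts,
    Step::UploadArtifacts,
    Step::UploadDocs,
    Step::Cleanup,
];

/// Runs every step in order and collects the CIDs each one produces.
/// Assumption of this sketch: the pipeline stops at the first failure
/// and reports which step failed.
fn run_publish<F>(mut run_step: F) -> Result<Vec<String>, (Step, String)>
where
    F: FnMut(Step) -> Result<Vec<String>, String>,
{
    let mut cids = Vec::new();
    for &step in STEPS.iter() {
        match run_step(step) {
            Ok(mut produced) => cids.append(&mut produced),
            Err(reason) => return Err((step, reason)),
        }
    }
    Ok(cids)
}
```

Placing the formatting check before the rehosting and build steps means that code which does not meet the coding standards is rejected before any expensive work starts.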

Anagolay CLI

The starting point for publishing an Operation to the Anagolay network is the CLI. It’s an assisted, interactive command-line interface that deals with authentication, publishing, and extrinsic invocation in a way that is mostly transparent to the end-user.

The only implemented command is anagolay operation publish; all other interactions with the network will be implemented in a similar form, as part of an SDK. When publishing an Operation using the CLI, the user can choose to sign the transaction with the Alice development account or with their own Substrate-based account. In the latter case, if the account doesn’t hold any tokens, 1 UNIT (one unit) is transferred from the Alice account; once that transfer is finalized, the process continues as it would have if the user had chosen Alice in the first place.
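The signing decision described above can be written down as a small planning function. This Rust sketch is illustrative only: the Signer and Action types and the plan_publish function are hypothetical and do not reflect how the CLI is actually implemented.

```rust
/// Who signs the publish transaction.
#[derive(Debug, Clone, PartialEq)]
enum Signer {
    /// The Alice development account.
    Alice,
    /// The user's own Substrate-based account.
    Personal { address: String, free_balance: u128 },
}

/// What has to happen on chain, in order.
#[derive(Debug, Clone, PartialEq)]
enum Action {
    TransferFromAlice { to: String, units: u128 },
    PublishOperation { signed_by: String },
}

/// Plans the transactions needed to publish an Operation.
fn plan_publish(signer: &Signer) -> Vec<Action> {
    match signer {
        Signer::Alice => vec![Action::PublishOperation {
            signed_by: "Alice".to_string(),
        }],
        Signer::Personal { address, free_balance } => {
            let mut actions = Vec::new();
            // An account without tokens is topped up with 1 UNIT first.
            if *free_balance == 0 {
                actions.push(Action::TransferFromAlice {
                    to: address.clone(),
                    units: 1,
                });
            }
            actions.push(Action::PublishOperation {
                signed_by: address.clone(),
            });
            actions
        }
    }
}
```

The publish action is always last, which matches the description that publishing continues only after the token transfer is finalized.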

Here is the flow chart of the command:

    cd op-file
    anagolay operation publish
    ? Do you want to proceed? (Y/n)
    ✔ success Sanity checks, done!
    ◟ Checking is the remote job done. This can take a while.
    # if the build is successful you will see this
    ℹ info Connected to Anagolay Node v0.3.0-acd0445-x86_64-linux-gnu
    ? How do you want to sign the TX (Use arrow keys)
    ❯ With Alice
      With my personal account # choosing this will require the mnemonic seed, account address and account type
    #### one choice only
    ? How do you want to sign the TX With my personal account
    ? Account Eomg7nj6K8tJ116dZHLEL7tJdfMxD6Ue2Jc3b5qwCo5qZ4b
    ? Account type sr25519
    ? Mnemonic Seed [input is hidden]
    ◠ Not enough tokens, transferring 1 UNIT(s) # if the account doesn't have any tokens 1 UNIT will be transferred
    ✔ success Token transfer done, blockHash is 0x7124813fbb952b6a70e96faaeda29bc8e643c149610a5f4eb96648078724da2a
    > Operation TX is at blockHash 0x605982d8d2cbe7afa46702eb8b46f056ed7862cc8642a17dae61f6d667d28799
    > Operation ID is bafkr4ifsdakwrkew2aftprisolprbeipardj4mkoskder7vwtlqsuqhfqu
    ✔ success Publishing is DONE 🎉🎉!

Or you can just watch a ~5 min recording on asciinema 😉

Playground

The intent of this first milestone is to release an environment that “just works” so that anybody can get to run the prototype and experiment with it. Therefore we provide a VSCode devcontainer setup that will take care of launching the backends, along with the simple example of execution of op_file directly from Rust. To sum up, two scenarios are covered for op_file:

- execution

- publishing

The Playground README.md file contains more information than what’s provided here; be sure to check it out.

We recommend trying it out and playing around → https://github.com/anagolay/w3f-grant-support-repo/tree/project-idiyanale-phase1

Useful Links

All Anagolay code is open source and can be found in the following repositories:

Anagolay blockchain → https://github.com/anagolay/anagolay-chain

Playground → https://github.com/anagolay/w3f-grant-support-repo/tree/project-idiyanale-phase1

Operation op_file → https://gitlab.com/anagolay/op-file

Anagolay js SDK → https://gitlab.com/anagolay/anagolay-js

Get in touch with us

Join us on Discord, Twitter, or Matrix to learn more and get our updates.

Want to join the team? See our Careers page.